Alexis Madrigal for The Atlantic:

There was a beginning to all this, long before it became technically possible.

Well, actually, there were many beginnings.

But one — maybe the most important one — traces back to Douglas Engelbart, who died last week, and his encounter with a 1945 article published here at The Atlantic, “As We May Think” by Vannevar Bush — an icon of mid-century science.

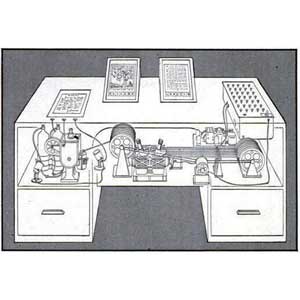

The essay is most famous for its description of a hypothetical information-retrieval system — the Memex, a kind of mechanical Evernote, in which a person’s every “book, record or communication” was microfilmed and cataloged.

“It is an enlarged intimate supplement to his memory,” Bush wrote. “It consists of a desk, and while it can presumably be operated from a distance, it is primarily the piece of furniture at which he works. On the top are slanting translucent screens, on which material can be projected for convenient reading. There is a keyboard, and sets of buttons and levers. Otherwise it looks like an ordinary desk.”

Bush did not describe the screens, keyboard, buttons and levers as a “user interface” because the concept did not exist. Neither did semiconductors or almost any other piece of the world’s computing and networking infrastructure except a handful of military computers and some automatic telephone switches.

A crucial component of the Memex was that it helped the brain's natural "associative indexing," so "any item may be caused at will to select immediately and automatically another." The Memex storehouse was made usable by the "trails" that the user (another word that did not have this meaning at the time) cut through all the information, paths that could later be retraced or passed on to a friend.

“There is a new profession of trail blazers, those who find delight in the task of establishing useful trails through the enormous mass of the common record,” Bush predicted. Consider for a moment that these processes — at scale — are exactly what makes Google a good search engine or Reddit a good social news site.

Bush’s essay was a groundbreaking ceremony for the information age. In Bush’s own terms, the complexity of the world and its problems required a better system, lest our memories and minds become overwhelmed by all there was to know. And this was not merely a personal, lifestyle problem. The worst war the world had ever known was finally coming to a close, and to a man like Bush, it had begun because of a lack of human wisdom. This is how his essay ends:

> The applications of science have built man a well-supplied house, and are teaching him to live healthily therein. They have enabled him to throw masses of people against one another with cruel weapons. They may yet allow him truly to encompass the great record and to grow in the wisdom of race experience. He may perish in conflict before he learns to wield that record for his true good. Yet, in the application of science to the needs and desires of man, it would seem to be a singularly unfortunate stage at which to terminate the process, or to lose hope as to the outcome.

What Bush knew when he wrote these words in the months leading up to July 1945 was that the most cruel weapon had been invented: American atomic bombs would not fall on Japan for two more months, but Bush had been intimately involved in their creation and certainly knew their use was a possibility. With that knowledge in his pocket, his answer to the prospective (and then real) horrors of science-enabled nuclear war — odd as it may seem — was to imagine a contraption to aid human knowledge acquisition.

For Bush, humans were racing against themselves: understand the complex world or face extinction through war. Those were the stakes at the outset of the information age.

Bush's article went far and wide and, if I can brag for our magazine a little, is considered one of the most influential magazine articles ever published about technology, and perhaps in any field. It even landed inside LIFE magazine in a condensed format in September of 1945.

The Memex as imagined by a LIFE illustrator. Image courtesy of LIFE.

Millions of copies of the Sept. 10 issue were printed and distributed around the world. LIFE had established itself as the preeminent photo chronicler of World War II, and the Red Cross habitually kept reading materials like it around for soldiers. And so it was that a copy of that issue, containing most of Bush's article, including the whole Memex section and the conclusion quoted here, made its way to a Red Cross library on the (still remote) island of Leyte in the Philippines.

Meanwhile, young Doug Engelbart, a radar technician in the Navy who never saw combat (the war ended as his boat pulled out of the San Francisco Bay), was on his way to the Philippines, too. He was transferred to Leyte, the island, and, though the record is not precisely clear on this point, perhaps to the little village called Leyte, too, at the end of a long inlet. It was here that, in the words of John Markoff, Engelbart "stumbled across a Red Cross reading library in a native hut set on stilts, complete with thatched roof and plentiful bamboo." Five years ago, a visitor to Leyte snapped a photograph of the town of Leyte.

In a hut like that one — and maybe even one of those huts specifically — Engelbart opened up that issue of LIFE and read Bush’s Atlantic article. The ideas in the story plowed new intellectual terrain for Engelbart, and the seeds that he planted and nurtured there over the next twenty years grew, with the help of millions of others, into the Internet you see today.

The Los Angeles Times obituary succinctly summed up his impact on the world: “Douglas Engelbart, whose work inspired generations of scientists, demonstrated in the 1960s what could happen when computers talk to one another.” Steve Wozniak went further, crediting Engelbart’s 1960s research “for everything we have in the way computers work today.” Yes, he invented the mouse, but he also laid out the concepts we’d need to understand the networked world.

So, in one tangible and real sense, the Internet we know now began in that hut across the world. As Bush made new thoughts possible for Engelbart, Engelbart made it possible for us to imagine the rest of it.

Engelbart wrote Bush a letter describing how profoundly he'd been affected by the latter's work. "I might add that this article of yours has probably influenced me quite basically. I remember finding it and avidly reading it in a Red Cross library on the edge of the jungle on Leyte, one of the Philippine Islands, in the fall of 1945," he wrote. "I rediscovered your article about three years ago, and was rather startled to realize how much I had aligned my sights along the vector you had described. I wouldn't be surprised at all if the reading of this article sixteen and a half years ago hadn't had a real influence on my thoughts and actions."

What’s fascinating is that Engelbart adopted Bush’s frame for the key problems and solutions of modern life. They both worried that the world had gotten too big to understand. “The complexity/urgency factor had transcended what humans can cope with,” Engelbart recalled in a 1996 oral history interview. “I suddenly flashed that if you could do something to improve human capability with that, then you’d really contribute something basic.”

Framing the problem this way helped Engelbart keep his distance from artificial intelligence researchers like J.C.R. Licklider, and it also led him to develop a framework for helping human minds come together to improve themselves. He did not think the machines could or should do the thinking for us. Markoff, a longtime chronicler of computing, sees Engelbart as one pole in a decades-long competition "between artificial intelligence and intelligence augmentation — A.I. versus I.A." That's because Engelbart's view of computing development retained a privileged place for humans. His academic biographer Thierry Bardini summed up his importance like this:

> Many still credit him only with technological innovations like the mouse, the outline processor, the electronic-mail system, or sometimes, the windowed user interface. These indeed are major innovations, and today they have become pervasive in the environments in which people work and play. But Douglas Engelbart never really gets credit for the larger contribution that he worked to create: an integrative and comprehensive framework that ties together the technological and social aspects of personal computing technology. Engelbart articulated a vision of the world in which these pervasive innovations are supposed to find their proper place. He and other innovators of this new technology defined its future on the basis of their own aspirations and ideologies. Those aspirations included nothing less than the development, via the interface between computers and their users, of a new kind of person, one better equipped to deal with the increasing complexities of the modern world.

A new kind of person. The words appear unseemly in a reactionary age that reifies the "real world," but consider the root of the desire for a new humanity: Tracing Engelbart back through Bush, we find the horror of World War II and the nuclear weapons that put nearly instant human extinction on the table for the first time in history. Mere tinkering around the edges of humanity would not have seemed up to the task.

What emerged for Engelbart as a real answer to Bush's statement of the problem was the co-evolution of humans and technology. Knowing that machines could do some things well and humans others, Engelbart imagined creating interfaces that would allow both to keep improving. It is an optimistic and hopeful outlook, one that is less brittle than hoping Watson will cure disease or fearing that we are being deracinated by our contact with the digital realm.

It seems to me that we may be sitting at a similar moment in history to the one that Bush considered. Through the first half of the 20th century, physics was generally lauded and assumed to produce societal goods. Then came the bomb, and the field had a lot of questions to answer about what its purpose was, and what its relationship should be to the military-industrial complex.

Perhaps I'm reaching here, but networked computing technology has held a similarly privileged spot in American life for at least 30 years. Networked computers democratized! Anyone could have a voice! They delivered information, increased the variety of human experience, allowed new capabilities, and helped the world become more open and connected. Computers and the Internet were forces for good in the world, which is why technology was so readily attached to complex, revolutionary processes like the Arab Spring.

But a broad skepticism about technology has crept into (at least) American life. We find ourselves part of a "war on terror" that is being perpetually, secretly fought across the very network that Engelbart sought to build. Every interaction we have with an Internet service generates a "business record" that can be seized by the NSA through a secretive process that does not require a warrant or an adversarial legal proceeding.

The disclosure of the NSA's surveillance program is not Hiroshima, but it does reveal the latent dark power of the Internet to record communication data at an unprecedented scale, data that can be used by a single nation to the detriment of the rest. The narrative of the networked age will never be as simple as it once was.

If you're inclined to see the trails of information Bush imagined future scholars blazing as (meta)data to be hoovered up, if you're inclined to see PRISM as a societal Memex concentrated in the hands of the surveillance state, then perhaps we're seeing the end of the era Bush's article heralded.

At the very least, those with the lofty goal of improving humanity are going to have to explain why they've chosen networked computing as their augmentation platform of choice, given the costs we now know exist. The con side of the ledger can no longer be ignored.

Still, it seems possible that we have not yet fulfilled Engelbart's vision. Bush and Engelbart did have distinct visions. For Bush, scientific knowledge itself provided salvation, as if units of wisdom could be manufactured for the preservation of the human race. Engelbart's view was, befitting its time, more cybernetic: People and technology fed one into the other in a spiral of improvement. The Internet is still young, the web younger still. We do not know what form they will take. The current externalities, now that they are known, are new feedback piping into the system, which means they can be accounted for in law or code or both. The co-evolution continues.

And I hope that someone, somewhere, heard that Engelbart had died, found his extensive archive, and found her mind aflame with new ideas for how humans, working together, can improve themselves. It's been a rough couple of years for technology, but to quote Bush, "It would seem to be a singularly unfortunate stage at which to terminate the process, or to lose hope as to the outcome."

Image courtesy of torito99