In the midst of Britain’s industrial revolution, the Luddites protested the impact of rapid technological adoption on working people by sabotaging factory machinery. Weaving frames were smashed, engines jammed, and in extreme instances whole factories burned.

Today, artificial intelligence poses a similar threat to the labor of creatives. Tech companies, perhaps the digital grandchildren of Victorian industrialists, build machine-learning models by scraping the work of artists without consent or compensation. A resistance movement is emerging, though its members are armed with coding smarts rather than clubs.

To empower artists in the fight against image-generating A.I. models, a group of computer science researchers at the University of Chicago plans to release (at an as-yet unspecified date) a new, potentially disruptive tool.

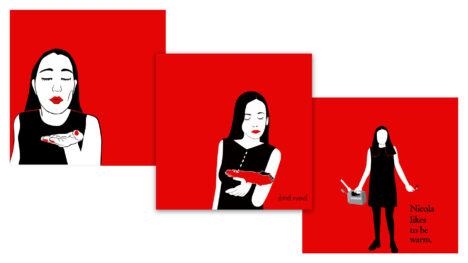

It’s called Nightshade, and it uses techniques such as targeted adversarial perturbations to let users invisibly alter their images before uploading them to the internet. If such images are scraped into an A.I. training set, they can cause the model to mis-learn objects and scenery, an effect called “poisoning.”
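For readers curious about what a “targeted adversarial perturbation” looks like in practice, here is a minimal sketch. It is not Nightshade’s actual algorithm, which the article does not describe in detail. It assumes a toy linear feature extractor (a random matrix standing in for a real image encoder) and nudges a “dog” image so that its features move toward those of a “cat” image, while capping every pixel change at a small limit. All names here (`perturb_toward_target`, `eps`, `step`) are hypothetical and chosen for illustration.

```python
import numpy as np

def perturb_toward_target(image, target, extractor, eps=0.03, step=0.005, iters=100):
    """Shift `image` so its features approach those of `target`.

    Assumption: `extractor` is a plain matrix used as a stand-in feature
    extractor. Each pixel may change by at most `eps`.
    """
    delta = np.zeros_like(image)
    target_feat = extractor @ target
    best_delta, best_loss = delta.copy(), np.inf
    for _ in range(iters):
        # Squared distance between current features and target features.
        residual = extractor @ (image + delta) - target_feat
        loss = float(residual @ residual)
        if loss < best_loss:
            best_loss, best_delta = loss, delta.copy()
        # Gradient of the squared distance with respect to delta.
        grad = 2.0 * extractor.T @ residual
        # Signed-gradient step, then project back into the allowed range.
        delta = np.clip(delta - step * np.sign(grad), -eps, eps)
        # Keep the perturbed image inside the valid pixel range [0, 1].
        delta = np.clip(image + delta, 0.0, 1.0) - image
    return image + best_delta

rng = np.random.default_rng(0)
dog = rng.uniform(0.2, 0.8, size=64)   # toy "dog" image, flattened
cat = rng.uniform(0.2, 0.8, size=64)   # toy "cat" image, flattened
W = rng.normal(size=(16, 64))          # hypothetical feature extractor

poisoned = perturb_toward_target(dog, cat, W)
before = np.linalg.norm(W @ dog - W @ cat)
after = np.linalg.norm(W @ poisoned - W @ cat)
max_change = np.max(np.abs(poisoned - dog))
print(f"feature distance: {before:.3f} -> {after:.3f}, max pixel change: {max_change:.3f}")
```

The point of the sketch is the trade-off: the altered image stays within a small pixel budget, so it still looks like the original, yet its features sit closer to the target concept. A real tool would work against a deep image encoder rather than a random matrix.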

The stated goal of the tool, as proposed in a paper submitted for peer review to a computer security conference, is to encourage the companies behind A.I. models to negotiate with content owners.

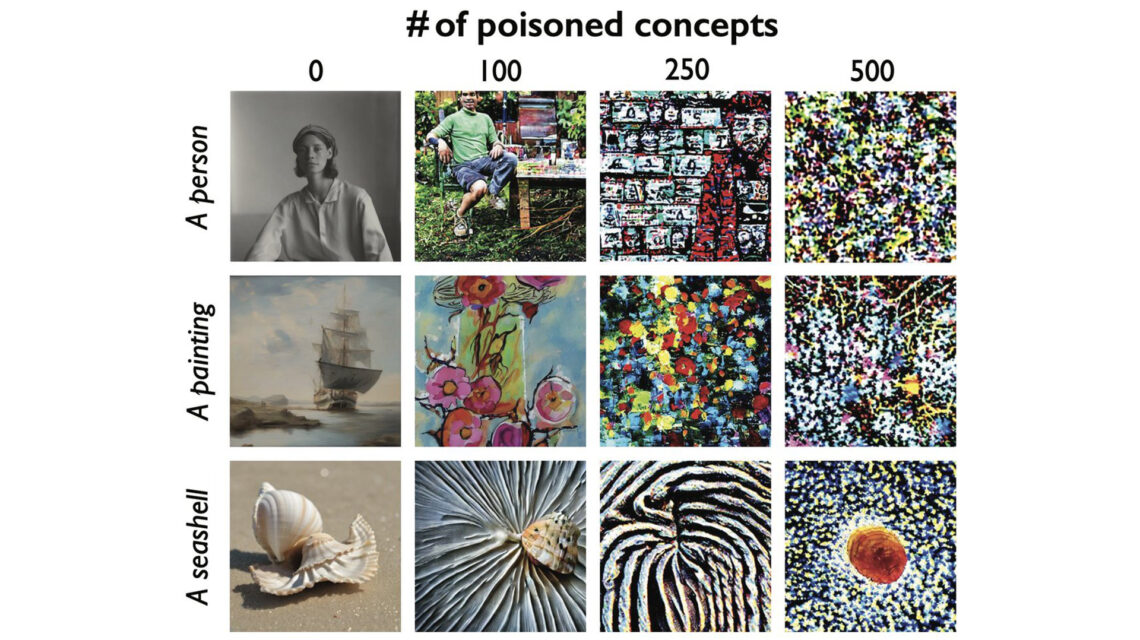

“We show that a moderate number of Nightshade attacks can destabilize general features in a text-to-image generative model, effectively disabling its ability to generate meaningful images,” the researchers wrote. “We propose the use of Nightshade and similar tools as a last defense for content creators against web scrapers.”

The work builds on Glaze, a tool released earlier this year that allows creatives to cloak images by altering pixels, thereby preventing their artistic style from being stolen by A.I. models. The hope is to integrate Nightshade into Glaze.

Nightshade is also more proactive than its forebear. An artist who uploads a poisoned image can manipulate a model into learning, for instance, that a dog is a cat, a handbag is a toaster, and a hat is a cake. The tool is open source, which means anyone can play with Nightshade and create new versions. The more versions there are and the more numerous the poisoned images, the greater the potential damage to A.I. image models.

The University of Chicago team tested Nightshade on Stable Diffusion. After plugging in 50 poisoned images of dogs, the model began spitting out strange, contorted animals, some with excess limbs and cartoon faces. After 300 poisoned images, Stable Diffusion began issuing cats. The poisoning also spreads to associated words, an effect the researchers call “bleed through,” such that “canine,” “wolf,” and “puppy” can also conjure felines. Crucially, undoing the effects of poisoning is difficult and requires each poisoned image to be removed.

The tool arrives at a moment when A.I. giants including Meta, Google, OpenAI, and Stability AI are being confronted with lawsuits from artists claiming their copyrighted material and intellectual property have been stolen.

Even setting aside the inherent challenges of individuals taking on ultra-wealthy companies, the pushback may have come too late, given that scraped data may already be irreversibly baked into models. In this light, Nightshade offers an alternative, clandestine means of encouraging tech companies to respect the copyright and intellectual property of artists.